Robots are getting a massive upgrade thanks to the rise of generative AI. This cutting-edge technology is giving our mechanical friends unprecedented smarts – allowing them to see, learn, adapt, and even create in wildly humanlike ways.

At its core, generative AI uses deep learning models to process vast pools of data and then generate brand new, realistic outputs like images, text, audio and more. When integrated into robotics systems, this AI can supercharge capabilities across the board.

Imagine robots that can instantaneously map their surroundings in photorealistic 3D, plan optimal movements with precision choreography, or even hold flowing conversations. With generative AI, they can perceive their environment like never before, acquire skills independently through experiences, and seamlessly interface with humans.

This game-changing tech is already revolutionizing fields like manufacturing, healthcare, and space exploration. Autonomous robots can take on increasingly complex tasks, navigating unpredictable settings while continuing to learn and evolve.

The market’s rapid expansion reflects generative AI’s immense potential. Researchers predict this space will swell from $7.9 billion in 2022 to $42.7 billion by 2027 at a blistering 40% annual growth rate.
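As a quick sanity check on those figures, the implied compound annual growth rate can be recomputed from the two endpoints. The sketch below assumes a five-year span (2022 to 2027) and uses the standard CAGR formula; the function name `cagr` is ours, not from the cited forecast.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.40 means 40% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $7.9 billion in 2022 growing to $42.7 billion by 2027.
rate = cagr(7.9, 42.7, 5)
print(f"Implied annual growth: {rate:.1%}")  # roughly 40%
```

The result lands at about 40% per year, which matches the growth rate quoted in the forecast.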

So get ready to witness AI-powered robots that challenge what we thought possible from machines. With their generative intelligence unleashed, the future of robotics is being rewritten.

What Are AI-Powered Robots?

Forget those clunky old robots – the new breed is bordering on bionic. AI-powered robots are like cyborgs, fusing advanced sensors with artificial intelligence to create hyper-aware, quick-witted machines. They’re packing vision that sees in 3D, vibration detectors that sense the slightest movements, and environmental scanners to map their surroundings in real-time.

Feed all that data into their AI brain, and these robots can instantly comprehend their world, identify objects and threats, and dynamically decide how to operate. From factories to hospitals, these smart machines are changing what’s possible through their uncanny awareness and decision-making prowess.
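To make the "sensors in, decision out" idea concrete, here is a deliberately tiny sketch of that loop. Everything in it is an illustrative assumption: the `SensorReading` fields, the thresholds, and the action names are hypothetical, and the hand-written rules in `decide` merely stand in for what would be a learned model in a real robot.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    obstacle_distance_m: float  # hypothetical value from a depth camera
    vibration_level: float      # hypothetical value from a vibration detector, 0.0 to 1.0


def decide(reading: SensorReading) -> str:
    """Pick an action from the current reading (a stand-in for the 'AI brain')."""
    if reading.obstacle_distance_m < 0.5:
        return "stop"
    if reading.vibration_level > 0.8:
        return "slow_down"
    return "move_forward"


readings = [
    SensorReading(3.0, 0.1),
    SensorReading(2.0, 0.9),
    SensorReading(0.3, 0.2),
]
actions = [decide(r) for r in readings]
print(actions)  # ['move_forward', 'slow_down', 'stop']
```

In a real system the rule-based `decide` function would be replaced by perception and planning models, but the shape of the loop (read sensors, choose an action, act) stays the same.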

Difference Between Traditional Robotics and AI

| Aspect | Traditional Robotics | AI (Artificial Intelligence) |
| --- | --- | --- |
| Definition | Machines designed to physically interact with the world. | Programs designed to process data, make decisions, and learn from experiences. |
| Primary Function | Performing physical tasks such as assembling products or performing surgeries with high precision and efficiency. | Analyzing data, identifying patterns, and making complex decisions dynamically. |
| Examples | Assembly line robots constructing cars, surgical robots performing procedures. | Systems guiding surgical decisions, algorithms for trading stocks on Wall Street. |
| Characteristics | Known for physical capabilities, excelling in repetitive, high-speed tasks to boost productivity. | Known for cognitive capabilities, versatility in processing information and making informed decisions. |
| Origins | Emerged from the industrial age, with the term “robot” coined in 1921, focusing on refining physical design. | Conceptualized in 1956, with rapid evolution driven by theoretical advancements and implementations by startups. |
| Role in Integration | Serves as the “body” that executes tasks. | Acts as the “mind” that plans, perceives, and controls. |
| Future Outlook | Increasingly incorporating AI to become more autonomous and efficient. | Continues to evolve, increasingly integrating with robotics to enhance both cognitive and physical capabilities. |

Current Landscape of AI-Powered Robotics

The present landscape of AI and robotics is marked by unprecedented advancements. Machine learning algorithms, deep neural networks, and sophisticated sensors have propelled AI to new heights, enabling machines to understand, learn, and make decisions with increasing autonomy. In tandem, robotics has evolved beyond traditional industrial applications, embracing collaborative and adaptive technologies.

HD Atlas (Boston Dynamics)

- Advanced Mobility: The HD Atlas demonstrated remarkable agility, capable of performing complex maneuvers like running, jumping, and even backflips, showcasing its ability to mimic and exceed human movements

- Dynamic Balancing: The robot could keep its balance when jostled or pushed, and was capable of getting up if it fell over, highlighting its dynamic stabilization capabilities

- Whole-Body Skills: The HD Atlas used whole-body skills to move quickly and balance dynamically, allowing it to lift and carry objects like boxes and crates with ease

- Outdoor and Indoor Operation: Designed to operate both outdoors and inside buildings, the HD Atlas could navigate rough terrain autonomously or under teleoperation

- Mobile Manipulation: The robot had two-handed mobile manipulation capabilities, enabling it to interact with and manipulate objects in its environment

Figure 01

Figure 01 is a humanoid robot developed by Figure in collaboration with OpenAI. Its capabilities are diverse and cutting-edge.

- Advanced Mobility: Figure 01 boasts remarkable agility, capable of performing complex maneuvers like running, jumping, and backflips, showcasing its human-like movement capabilities

- Visual Language Model (VLM): The robot utilizes a Visual Language Model developed by Figure in collaboration with OpenAI, enabling it to engage in full conversations with people and exhibit high-level visual and language intelligence

- Autonomous Decision-Making: Figure 01 operates autonomously, making decisions based on external stimuli without the need for human intervention, setting it apart from other humanoid robots like Tesla’s Optimus

- Bimanual Manipulation: The robot demonstrates intricate bimanual manipulation skills, allowing it to interact with and manipulate objects with precision, showcasing its dexterity and control capabilities

- Human-Robot Interaction: Through speech-to-speech reasoning powered by OpenAI’s VLM, Figure 01 engages in natural conversations with humans, comprehending complex visual and textual cues, and responding with human-like dexterity, revolutionizing human-robot interaction

Phoenix (Sanctuary AI)

Phoenix (Sanctuary AI) is designed for highly interactive environments and likely incorporates advanced AI to manage complex interactions and tasks.

- Physical Strength and Dexterity: Phoenix stands 170 cm tall, can carry up to 25 kg, and is equipped with 20-DoF (degrees of freedom) robotic hands for precise manipulation

- Human-Like Intelligence: Runs on Sanctuary AI’s Carbon AI control system, which mimics human behavior and understands natural language

- Rapid Learning and Adaptability: Can learn new tasks in 24 hours, with improved visual acuity, tactile sensing, and training data capture

- Autonomous Operation: Observes, assesses, and acts on tasks efficiently, and supports human supervision, fleet management, and remote operation

- Enhanced Task Automation: Reduces task automation time from weeks to 24 hours, with improved hardware, software, uptime, and build time for increased flexibility and durability

Digit (Agility Robotics)

Digit (Agility Robotics) is known for its versatility in navigation and carrying abilities, making it suitable for logistics and delivery applications.

- Autonomous Navigation: Digit can navigate through spaces designed for humans, using LiDAR, cameras, and an inertial measurement unit (IMU) for autonomous and teleoperated navigation

- Multi-Purpose Functionality: The robot is designed to perform a variety of tasks, including bulk material handling within warehouses and distribution centers, and can adapt to different workflows and seasonal shifts

- Human-Centric Design: Digit is designed to work alongside humans in the same space, with a carrying capacity of 35 pounds (about 16 kg), and operates in spaces built for people without requiring costly retrofitting

- Advanced Sensors and Vision: The robot features four Intel RealSense depth cameras for object and environment recognition, and its “eyes” display where it’s going, enhancing safety and collaboration with humans

- Enhanced Operational Efficiency: Digit is positioned to significantly elevate operational efficiency in diverse industries, particularly in delivery, logistics, and manufacturing, by executing intricate tasks, alleviating physical strain on human workers, and catalyzing enhanced safety and precision across various settings

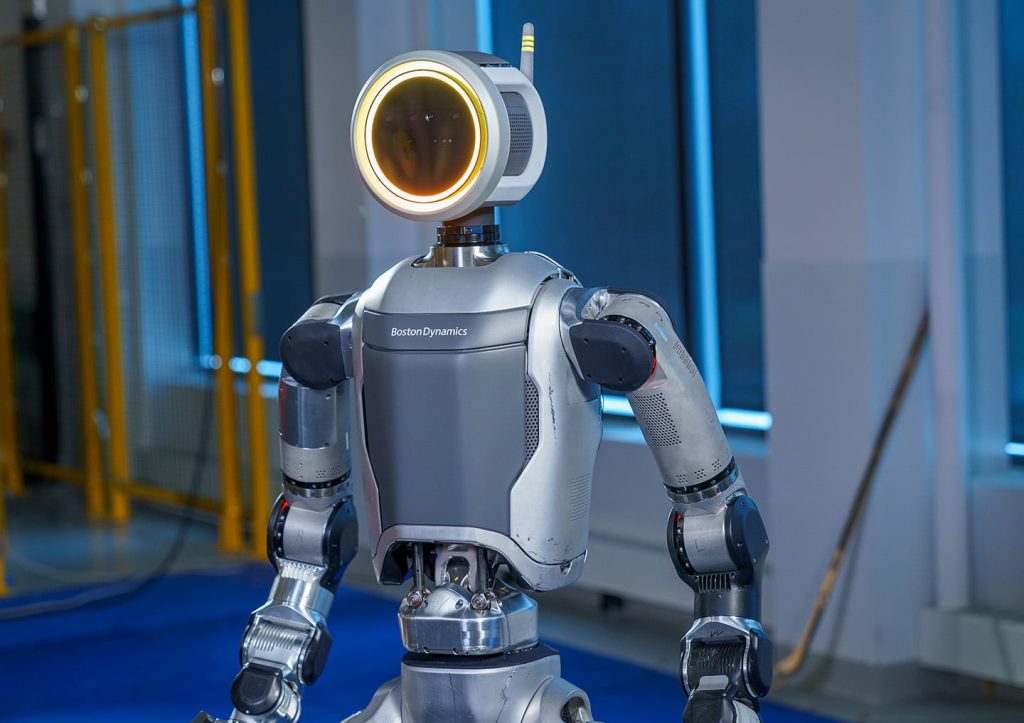

Atlas (Boston Dynamics)

Atlas (Boston Dynamics) is a well-known figure in robotics, capable of running, jumping, and performing complex maneuvers, often used in research and rescue operations.

- Advanced Mobility and Agility: Atlas can perform complex maneuvers like running, jumping, backflips, and parkour, showcasing its human-like agility and ability to navigate rough terrain

- Dynamic Balancing: The robot can maintain balance when pushed or jostled, and is capable of getting up if it falls over, demonstrating advanced dynamic stabilization

- Whole-Body Manipulation: Atlas uses its whole body to lift and carry objects like boxes and crates, leveraging its strength and dexterity for mobile manipulation tasks

- Autonomous Operation: The robot can operate both outdoors and indoors, navigating environments autonomously or under remote control using its onboard sensors and computers

- Collaboration with Humans: Atlas is designed to work alongside humans in shared spaces, with the latest electric model featuring enhanced safety features and a more human-like form factor for seamless integration

H1 (Unitree)

H1 (Unitree) is part of a new generation of humanoid robots focusing on cost-effective designs, aiming to make robotic technology more accessible.

- Dynamic Mobility and Balancing: The Unitree H1 series, including V1.0 and V2.0, showcased basic mobility and balancing skills, with the latest V3.0 demonstrating a massive dynamic leap in robotics, breaking the world record for the fastest full-sized humanoid robot

- Speed and Agility: The Unitree H1 Evolution V3.0 achieved a maximum walking speed of 3.3 m/s, surpassing previous records and showcasing exceptional speed and agility for a full-sized humanoid robot

- Whole-Body Coordination: The H1 V3.0 exhibits flexible and dynamic movements, including coordinated dance routines, demonstrating the robot’s ability to mimic complex human motions with whole-body coordination

- Power and Performance: Equipped with advanced actuation and high-performance robot AI, the Unitree H1 Evolution V3.0 achieves impressive power and speed, setting new standards in robotic advancements and potential applications

- Autonomous Operation and Flexibility: The Unitree H1 possesses stable gait and highly flexible movement capabilities, enabling it to walk and run autonomously in complex terrains and environments. It also features 360° depth perception for enhanced spatial awareness and obstacle avoidance

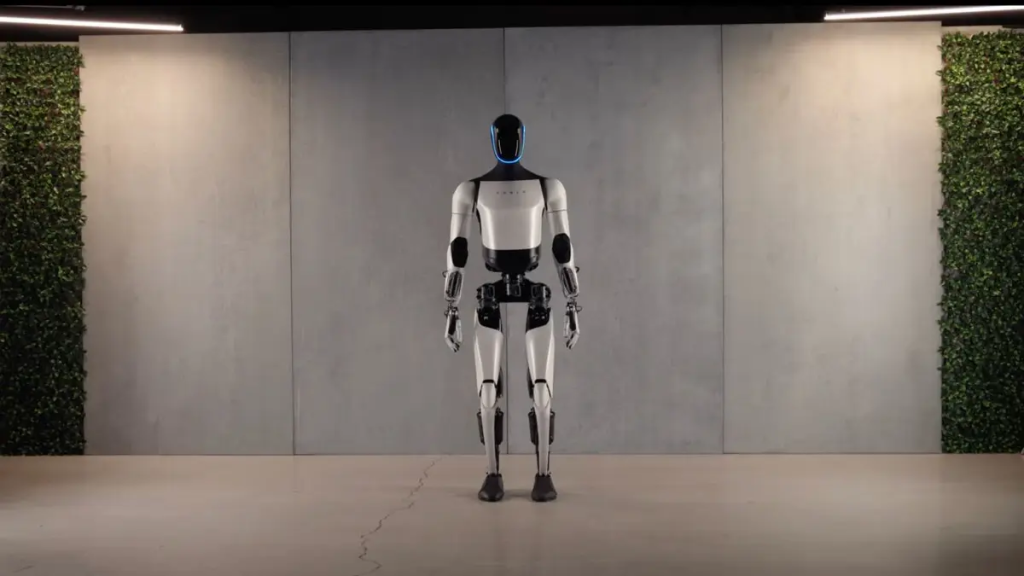

Optimus Gen 2 (Tesla)

Optimus Gen 2 (Tesla) is a newer iteration in Tesla’s line of robots, potentially focusing on manufacturing and general-purpose tasks, emphasizing Elon Musk’s vision of a highly versatile utility robot.

- Improved Mobility: Optimus Gen 2 features a 30% increase in walking speed compared to previous models, enhancing its overall mobility and agility

- Enhanced Dexterity: The robot is equipped with 11 degrees of freedom in its hands, enabling delicate and precise movements for tasks requiring fine manipulation and object handling

- Human-Like Gestures: Optimus Gen 2 can replicate human-like gestures, showcasing advanced capabilities in mimicking human movements and interactions

- Weight Reduction: The robot is 10 kg lighter than previous models, without compromising its abilities, demonstrating improved efficiency and maneuverability

- Learning and Adaptation: Optimus Gen 2 can acquire new skills by observing humans, utilizing artificial intelligence similar to Tesla’s FSD technology to track movements and learn from interactions, showcasing its adaptive learning capabilities

Components of an AI-Powered Robot

- Power Supply – The working power to the robot is provided by batteries, hydraulic, solar power, or pneumatic power sources.

- Actuators – Actuators are the energy conversion devices inside a robot. Their major function is to convert energy into movement.

- Electric motors (DC/AC) – Motors are electromechanical components that convert electrical energy into mechanical energy. In robots, motors provide rotational movement.

- Sensors – Sensors provide real-time information on the task environment. Robots can be equipped with tactile sensors that imitate the mechanical properties of the touch receptors in human fingertips, and with vision sensors that compute depth in the environment.

- Controller – Controller is a part of a robot that coordinates all motion of the mechanical system. It also receives input from the immediate environment through various sensors. The heart of the robot’s controller is a microprocessor linked with the input/output and monitoring device. The command issued by the controller activates the motion control mechanism, consisting of various controllers, actuators and amplifiers.

- Deep Learning – Deep learning is an advanced evolution of machine learning, utilizing deep neural networks with potentially billions of parameters. Unlike traditional machine learning that handles simple input vectors, deep learning processes complex data like images, speech, and text. This capability allows it to unravel intricate patterns hidden in massive datasets, significantly enhancing the machine’s learning and predictive abilities.

- Reinforcement Learning (RL) – RL is a subset of machine learning that is particularly well suited to robotics. It mirrors the trial-and-error learning humans use: a system iteratively improves by receiving positive or negative feedback on its actions. One example is teaching legged robots to walk autonomously, a process that can be accomplished in as few as 10 hours. Through RL, robots not only execute tasks but also refine their skills over time, becoming more efficient and effective at navigating their environments.

- Computer Vision (CV) – Relying on data science and data labeling, machines are trained to perceive the images and video they capture, that is, to identify, detect, and classify objects in this visual content. Powered by CV models, robots can detect movement, recognize faces and emotions, inspect their surroundings, and so on.

- NLP, ASR, TTS – Natural Language Processing (NLP) gives robotic systems the ability to perceive and interpret text written in human language. Combined with Automatic Speech Recognition (ASR), it lets them recognize human speech. This way, robots can not only understand direct commands but also extract meaning from variations in human expression, perform sentiment analysis, and produce a relevant text response, or a spoken one with the help of speech synthesis (Text-to-Speech, TTS).
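The trial-and-error loop behind reinforcement learning is easier to picture with a toy example. The sketch below is not how legged robots are actually trained; it is a minimal tabular Q-learning agent on a made-up five-cell corridor, where the "robot" starts at cell 0 and earns a reward for reaching cell 4. The environment, the reward values, and the hyperparameters (`ALPHA`, `GAMMA`, `EPSILON`) are all assumptions chosen for illustration.

```python
import random

N_STATES = 5        # cells 0..4 on a line; cell 4 is the goal
ACTIONS = [-1, +1]  # index 0 steps left, index 1 steps right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # learning rate, discount, exploration rate


def train(episodes: int = 500, seed: int = 0) -> list[list[float]]:
    """Learn a Q-table (expected return per state and action) by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            # Explore occasionally; otherwise take the best-known action.
            if rng.random() < EPSILON:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state = min(max(state + ACTIONS[a], 0), N_STATES - 1)
            # Feedback: positive at the goal, a small penalty for every other step.
            reward = 1.0 if next_state == N_STATES - 1 else -0.01
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            q[state][a] += ALPHA * (reward + GAMMA * max(q[next_state]) - q[state][a])
            state = next_state
    return q


q_table = train()
policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
print(policy)
```

After training, the learned policy should point "right" from every non-goal cell, since that is the shortest path to the reward. Real robotic RL replaces the table with a neural network and the corridor with a physics simulator or the robot itself, but the feedback-driven update is the same idea.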

Groundbreaking Applications of AI in Robotics

AI in robotics aims to create an intelligent environment in the robotics industry for better automation. It uses computer vision techniques, intelligent programming, and reinforcement learning to teach robots to make human-like decisions and execute tasks in dynamic conditions.

We’ll cover the following use cases of AI in robotics:

| Category | Robot Type | Benefits |
| --- | --- | --- |
| Manufacturing | Quality Control | Improved product quality, Reduced human intervention |
| Manufacturing | Collaborative Robots | Improved safety, Boosted productivity |
| Manufacturing | Autonomous Robots | Increased human safety, Improved precision |
| Manufacturing | Assembly Robots | Accident and injury prevention, Workflow optimization |
| Aerospace | Autonomous Rover | More efficient Mars surface research, Increased object identification |
| Aerospace | Robotic Companions | Improved work experience and efficiency for astronauts |
| Aerospace | Advanced Drones | More successful and safer rescue missions, Better effort prioritization |
| Transportation and Delivery | Advanced Drones | Improved navigation and route planning, Increased delivery speed |
| Agriculture | Advanced Drones | Optimized crop yields, Reduced waste, Reduced labor costs |
| Healthcare | Robotic Assistants | Improved patient care, Performance increase for medical staff |
| Healthcare | Robotic Surgery | Reduced risk of complications for the patient, Greater precision |
| Healthcare | Service Robots | Personalized patient experience, Improved patient’s emotional state |
| Customer Service | Social Robots | Increased customer engagement and loyalty |
| Customer Service | Service Robots | Increased product and service delivery speed |
| MilTech | Unmanned Aerial Vehicles | Safer reconnaissance and surveillance, Efficient bomb disposal |
| MilTech | Unmanned Ground Vehicles | Safe demining operations |
| Smart Home | Service Robots | Better support for people with limited abilities, Reduced effort for house chores |
| Smart Home | Personal Robotic Assistants, Social Robots | Increased personal efficiency, Learning companion for kids |

Benefits of Integrating Generative AI in Robotics

The fusion of Artificial Intelligence with Robotics is unlocking unparalleled opportunities across industries, governance, and personal use, setting the stage for a significant economic transformation. By the decade’s close, AI is expected to contribute to over a quarter of the global GDP, underscoring its pivotal role in future economic landscapes. The AI-driven robotics sector, in particular, is on a trajectory of rapid growth. Between 2024 and 2030, it’s forecasted to expand at an impressive annual rate of 11.63%, culminating in a market valuation of US$36.78 billion by 2030.

- Enhanced Efficiency: Powered with AI, robots function autonomously or semi-autonomously which means they no longer need to be controlled by an operator all the time. Thus business owners can finally delegate time-consuming, repetitive, and mundane tasks to robots, freeing up their human resources for higher-level work that requires skills robots cannot provide. Also, autonomous robots can work 24/7, without taking shifts, lunch breaks, sick leaves, and vacations, ensuring uninterrupted service and product delivery.

- Improved Accuracy: The AI technologies mentioned above, especially deep learning and reinforcement learning, together with sensor fusion, contribute greatly to increasing the accuracy of intelligent machines. With self-learning algorithms enabling them to learn from experience and advanced sensor data processing in real time, dexterous robots can manipulate their limbs and adapt grip force well enough to cut and cook food, assemble small and fragile mechanisms, and even assist in open-heart surgeries with very high precision.

- Increased Productivity: This advantage of Artificial Intelligence for Robotics comes logically with the prior ones – more efficient and accurate work, in the long run, brings faster results with fewer defects and losses. Additionally, machines’ work efficiency won’t be affected by physically challenging conditions, such as extreme temperatures, heights, weight loads, or lack of light and fresh air.

- Risk Reduction and Safety: Hybrid automation and AI in robotics are game-changing for domains and environments with increased risks for human health and life. Such critical activities as working with hazardous chemicals and organisms in labs, rescue operations in dangerous locations, and aid in military and anti-terrorist missions can become much safer. Also, intelligent robotic systems increase worker safety in potentially harmful environments, such as plants and factories, or ensure patient wellness making high-precision procedures less invasive.

- Cost Savings: An AI-powered robotic system may look like a hefty investment, but most of the cost is paid up front. It won’t ask you for salary increases, bonuses, perks, and social benefits. A fleet of robots in a warehouse or at a manufacturing facility can do the job of dozens of workers with increased efficiency and precision, while needing only a handful of specialists for support and maintenance.

Challenges and Limitations

- Ethical Considerations: Navigating the ethical landscape of AI decisions is complex, given the subjective nature of ethics across cultures. Current strategies involve training AI with carefully selected data and limiting robotic autonomy to address these ethical dilemmas.

- Job Displacement: The rise of robotics heralds a shift in the job market, phasing out certain roles while simultaneously creating demand for new skills and professions. This evolution reflects the double-edged sword of technological progress.

- Privacy and Security: AI robots, as data-collecting entities connected to the internet, face significant privacy and security risks. Despite these challenges, the tech community actively develops robust security measures to protect against breaches and misuse.

- Human Interaction: Bridging the gap between AI and human psychology is crucial for developing robots that can handle unpredictable human behavior safely and empathetically. Advancements in teaching machines emotional intelligence could foster deeper human-robot connections.

Future Implications and Trends

- AI Revolution in Business: With over half of organizations embracing AI, a new era of business automation is upon us. Chatbots and digital assistants are the new norm, offering real-time customer service and freeing up human creativity for higher pursuits.

- The Workforce Evolution: AI may reshape the job market, but it’s not just about replacement; it’s about evolution. As routine tasks are automated, new roles emerge, demanding a fresh set of skills and a renaissance in workforce education.

- Data Privacy at the Forefront: The AI-driven thirst for data has raised privacy alarms, prompting regulatory bodies to scrutinize and advocate for transparent data practices. The White House’s Blueprint for an AI Bill of Rights stands as a testament to the importance of safeguarding personal information.

- Regulation and Responsibility: The legal landscape is adapting to AI’s challenges, balancing innovation with ethical and privacy considerations. As AI continues to evolve, so too may the regulations that guide its ethical use.

- AI’s Environmental Dilemma: AI’s potential to optimize and conserve clashes with its environmental footprint. The tech community is tasked with harnessing AI’s power responsibly, ensuring that its application in sustainability efforts doesn’t backfire.

Conclusion

At the intersection of innovation and imagination, Generative AI is leading a transformative journey in robotics, marking a significant evolution in machine intelligence. This integration not only enhances robotic functions—such as precise autonomous navigation and empathetic interactions—but also positions robots as vital partners in human progress. The AI robotics sector is experiencing rapid growth, fueled by substantial investment and optimism about future capabilities. Despite facing ethical challenges, job displacement concerns, and privacy issues, the commitment to navigate these complexities responsibly persists. Looking ahead, the synergy between Generative AI and robotics promises a future where human and machine collaboration enhances safety, efficiency, and sustainability.